A Trust-Centric Approach to ML-Enabled Data Consulting

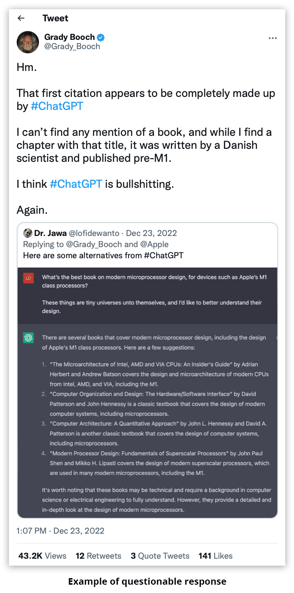

The ChatGPT wave rolled through social media this Fall with an incredible amount of wonder and awe. AI and ML influencers embraced the dream that Large Language Models (LLMs) will replace Google and other research tools. However, sprinkled in those posts were also examples of questionable responses from the AI engine – inaccuracies that were presented in a way that sounded factual. Lack of citations and oblique training methods are being called out as risks to relying upon LLMs for critical decision making. These debates led me to think about how important trust is when it comes to data analysis and mission-critical business decisions. The AI landscape is rapidly accelerating – there are already variants of ChatGPT that provide references - yet it will take time for organizations to embrace this type of AI for high-value high-consequence decision making. In my experience there needs to be three pillars of trust in any data consulting methodology: traceability, versioning, and explainability.

The ChatGPT wave rolled through social media this Fall with an incredible amount of wonder and awe. AI and ML influencers embraced the dream that Large Language Models (LLMs) will replace Google and other research tools. However, sprinkled in those posts were also examples of questionable responses from the AI engine – inaccuracies that were presented in a way that sounded factual. Lack of citations and oblique training methods are being called out as risks to relying upon LLMs for critical decision making. These debates led me to think about how important trust is when it comes to data analysis and mission-critical business decisions. The AI landscape is rapidly accelerating – there are already variants of ChatGPT that provide references - yet it will take time for organizations to embrace this type of AI for high-value high-consequence decision making. In my experience there needs to be three pillars of trust in any data consulting methodology: traceability, versioning, and explainability.

Traceability (Where did the data come from?)

Trust in data analytics starts with the confidence that the source of the information is known and accurate. In software we have a term for this – traceability. A beautiful visualization will only get you so far if you can’t show the data to back it up. To ensure this, the data points in that visualization should have links back through every stage of its transformation until you get to the “ground truth” set of source data.

Examples of ground truth data are:

- PDF files of scanned legal documents

- User reviews captured from a survey

- Geo coordinates corresponding to point-in-time events

- Peer reviewed academic papers

Each one of the examples above may contain one or many data points that can be used in decision-making analytics. One technique to capture those data points is annotation. End users (who have ground-truth knowledge or expertise) can highlight relevant points of data and classify the data as required. This is called annotating. Annotations are assigned unique identifiers and have links tracing them back to their source document(s). From this point on, when a datapoint is used for analytics the source for that data can be easily referenced.

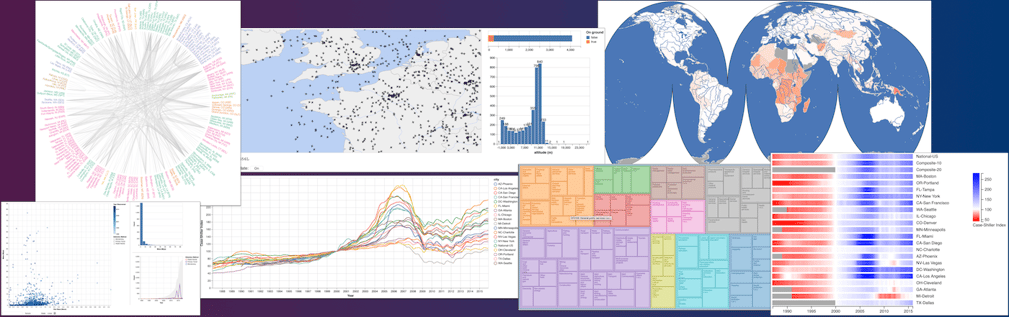

Another methodology that is helpful in ensuring traceability is building a hierarchical model of the data. It is a bit old-school, in today’s world of NoSQL and unstructured data, however when I start a data consulting project, one of the first things that I do is try to identify natural hierarchies in the data. Typically, this hierarchy can be formally captured in an object model that is maintained in a database. The advantage of maintaining a parent-child model in this manner is that we can use the hierarchy in visualizations. Tree maps are helpful visualization techniques to demonstrate how data is grouped and linked together. Often these views match the mental model the client has of their business data.

I have found that clients are engaged, and feel at ease with, my recommendations when they can see that the data can always be traced back to its source, and the natural hierarchies in their data are visible.

Versioning (Has my data changed? Are we using the most recent version?)

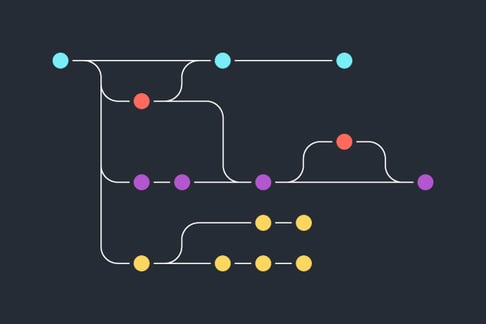

Data is constantly evolving. Depending upon the industry, last week’s information may be too old to rely upon. Therefore, it is important that data-centric methodologies can identify point-in-time snapshots of data, machine learning models, and the code used to analyze that data.

Data

No matter what storage system you use (S3, Sharepoint, Dropbox, etc.) documents and data files shall be baselined and tracked for changes. Versioning of database data is similar. Database records can versioned either programmatically (i.e., maintaining a primary key version history table) or using a data version control system such as DVC.

Machine Learning Models

Machine learning model versions should also be tracked and trained based upon the current state of source data (i.e., some annotations may have been added, removed, changed). This ensures that models are up to date and representative of the most accurate training data. Tools such as DVC, Pachyderm and MLFlow are examples of machine learning versioning systems.

Source Code

When it comes to source code and notebooks, Git is the industry standard. Not much more needs to be said.

The ability to confidently time-box the data and methodologies used for a report, or recommendation, is critical to ensuring customer trust in analytics.

Explainability (How did we reach this conclusion?)

One of the biggest criticisms of LLMs is the lack of transparency around how the models are trained, and what training data is being used to train them. Was the training data ethically sourced? Does the training data represent a well-rounded view of the subject being evaluated? How did the model come up with this result? These types of questions are important when performing high-impact consulting services.

One of the biggest criticisms of LLMs is the lack of transparency around how the models are trained, and what training data is being used to train them. Was the training data ethically sourced? Does the training data represent a well-rounded view of the subject being evaluated? How did the model come up with this result? These types of questions are important when performing high-impact consulting services.

As much as I love jumping straight to a pre-trained BERT model; when I start a data project, I try to see what kind of insights I can gather without using Machine Learning or AI. Explainable algorithms and statistical formulas are preferred over pre-trained large language models. The reason for this is there is no question as to the source of the data or the mathematics being used to perform the analysis.

When I do get to the point of considering AI (usually it’s when looking for clustering and classification trends) I will start with AI algorithms that are not pre-trained on external data (such as libraries from scikit-learn). If those models prove insufficient, I will jump ahead to a pre-trained LLM to see what kind of insights I can gather from my data then work my way backwards to explain the source of the LLM. When selecting pre-trained LLMs, I look for models that come from peer-reviewed data laboratories. Huggingface is quickly becoming the de-facto starting point to locate pre-trained machine learning models. Model cards are generally provided by authors who publish models on Huggingface. These cards will specify information around the source data used to train the models, and the research behind the training.

There is nothing worse in a data consulting project than presenting your findings to a client and not being able to answer the question, “how did you get to this result?” Being able to explain how I arrived at the data being presented to clients is paramount to a trust-centric approach.

Putting It All Together – Insights & Analytics Other ResourcesThe hard work that I complete behind the scenes to ensure trust doesn’t mean much to the client if they don’t know that it exists. The final piece to this trustworthy puzzle is the visualization included in the end report. It’s important that visualizations include hyperlinks to the source ground truth data. Users can click on a report and select to view the source documents, when needed. Likewise, point-in-time filters and hierarchical views of parent-child data relationships help tell the story to the user that this data is relevant and in a format that matches the users’ mental model of their business.

In the final analysis, trust is a construct or agreement between two people that may be extended to an organization or an application. Following the principles above helps build the trust into my application but the recipient’s confidence in the insight derived will most often rely on the bone fide expertise of the person delivering it. In that sense I’m building an application that leverages that trust. Telling someone “I read it on the internet” isn’t going to work.

Other Resources

Here are links to resources that I have found helpful around the topics discussed in this article:

- Explainable AI

- Ethical AI

- Traceability

- This Architecture Decision Record Template is a great starting point for documenting architectural decisions – which can be the heart of any traceability/explainability method

- Wikipedia is actually a great place to start to learn more about requirements traceability

- For light reading, you can check out the EU Commission's Ethics Guidelines for Trustworthy AI, which has a number of recommendations related to traceability

Photo Credits:

https://www.enseignement.polytechnique.fr/informatique/INF552/

https://www.perforce.com/blog/vcs/git-branching-model-multiple-releases

https://jimbuchan.com/when-the-curtain-is-pulled-back